Glossary

| Term | Definition |
|---|---|
| A/B Testing (Split Testing) | Comparing two versions of an email to see which performs better. |
| CTR (Click-Through Rate) | The percentage of recipients who clicked a link within the email. |
| Conversion Rate | The percentage of users who completed a desired action after clicking an email link. |
| CTA (Call to Action) | A prompt that encourages users to take a specific action. |
| Segmenting | Dividing your email list into smaller, targeted groups. |
| Sample Size | The number of recipients in each A/B test group. |
| Open Rate | The percentage of delivered emails that were opened by recipients. |

Introduction

Email marketing is one of the most powerful tools to connect with your audience, drive sales, and grow your business. But with crowded inboxes and rising consumer expectations, just sending emails isn’t enough—you need to send the right ones.

That’s where A/B testing comes in.

A/B testing is the key to building smarter, more strategic campaigns. It allows you to make data-backed decisions, tailor content to your audience’s preferences, and consistently improve engagement and revenue.

This guide will walk you through everything you need to know about A/B testing—from what to test to how to track performance—so you can transform your email marketing results.

What is A/B Testing in Email Marketing?

A/B testing, also called split testing, is the process of comparing two variations of an email to determine which one performs better. Typically, you change one element (like the subject line or CTA), send each version to a portion of your audience, and analyze the results to see which drives better outcomes.

Why It Matters:

- Better engagement

- Higher conversions

- Improved customer understanding

- Greater ROI from each campaign

By focusing on what works and eliminating guesswork, A/B testing helps marketers fine-tune campaigns and scale what resonates.

Key Elements to A/B Test

The best tests are simple and focused. Choose one variable at a time to isolate results.

| Element | What to Test |
|---|---|
| Subject Line | Length, tone, personalization, urgency |
| Email Copy | Paragraph vs. bullet points, long vs. short, storytelling vs. direct sales |
| CTA | Button color, placement, wording, frequency |
| Images | Product vs. lifestyle, static vs. animated GIFs |
| Sender Name | Brand vs. personal (e.g., “Team Blossom” vs. “Emily from Blossom”) |
| Send Time | Weekday vs. weekend, morning vs. evening |

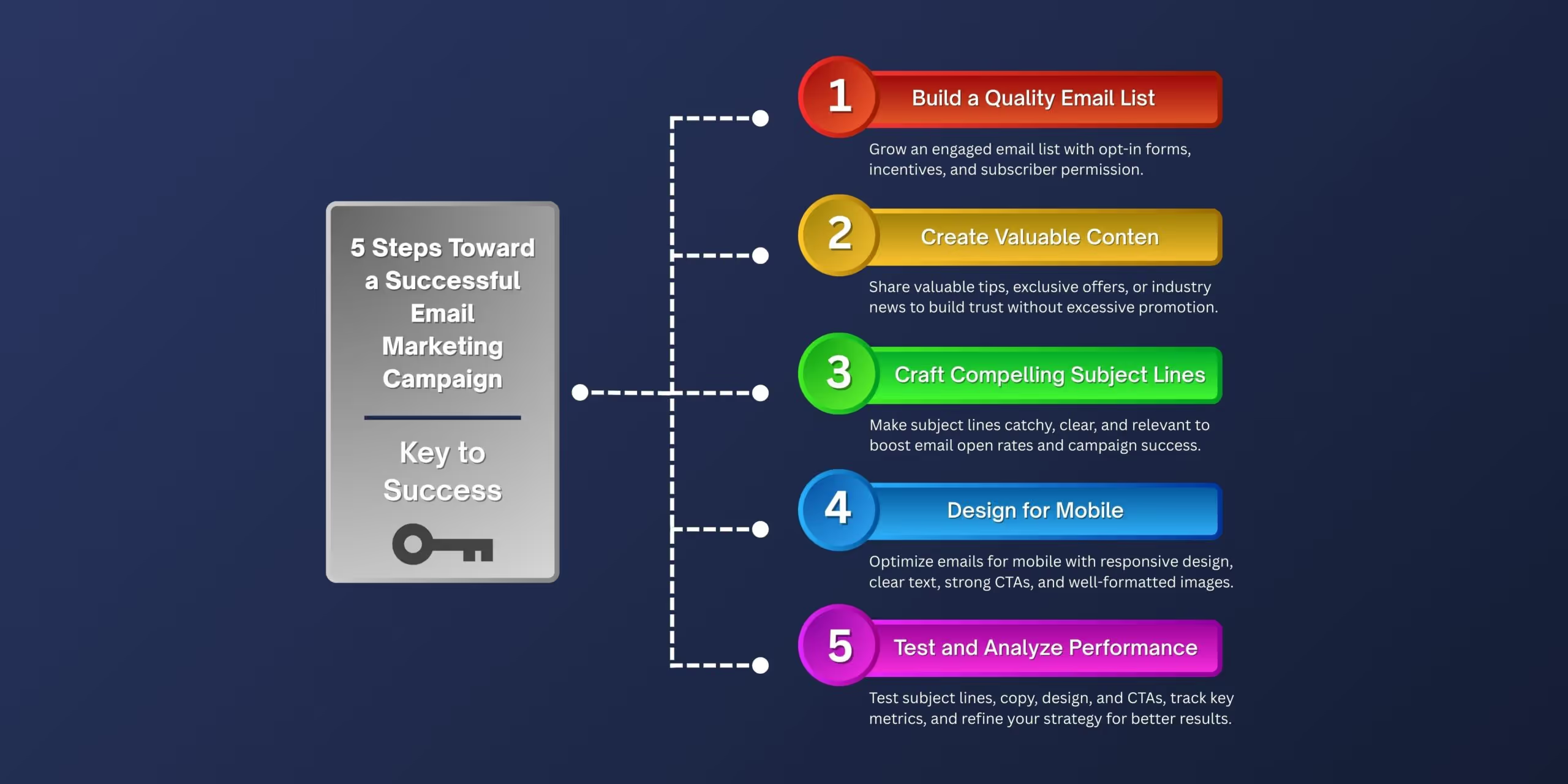

How to Set Up a Winning A/B Test

1. Set a Clear Goal

Before testing, define what success looks like. Are you aiming for:

- More opens?

- Higher CTR?

- Increased purchases?

2. Choose One Variable

Avoid testing too many elements at once. One change = clean data.

3. Segment Your Audience

Split your test audience evenly into two randomized groups. Make sure each group is large enough for the results to reach statistical significance.
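As a rough illustration of the random split described in this step, here is a minimal Python sketch. The function name `split_audience`, the `seed` parameter, and the example addresses are hypothetical; most ESPs handle this split for you, so treat this as a way to see the idea rather than a required step.

```python
import random

def split_audience(recipients, seed=42):
    """Shuffle a copy of the list and cut it into two equal-sized groups."""
    shuffled = list(recipients)
    # A fixed seed makes the split reproducible; change or remove it as needed.
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    group_a = shuffled[:half]
    # With an odd-sized list, one recipient is left out so both groups match in size.
    group_b = shuffled[half:half * 2]
    return group_a, group_b

# Hypothetical example list of 11 addresses
emails = [f"user{i}@example.com" for i in range(11)]
group_a, group_b = split_audience(emails)
print(len(group_a), len(group_b))
```

Shuffling before cutting matters: splitting an unshuffled list (for example, one sorted by signup date) can put systematically different subscribers in each group.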

4. Send the Variations

Deliver both versions simultaneously (unless you’re testing send time).

5. Monitor Results

Use your ESP or analytics platform to compare:

- Open Rate

- CTR

- Conversion Rate
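If you want to check these metrics by hand, the sketch below computes them from raw counts using the glossary definitions. The function names and the example counts are hypothetical, and the choice of delivered emails as the CTR denominator is an assumption; some platforms report clicks per open instead, so check how your ESP defines each rate.

```python
def rate(numerator, denominator):
    """Return a percentage, or 0.0 when there is nothing to divide by."""
    return 100.0 * numerator / denominator if denominator else 0.0

def email_metrics(delivered, opens, clicks, conversions):
    """Compute the three comparison metrics for one variation."""
    return {
        "open_rate": rate(opens, delivered),          # opens per delivered email
        "ctr": rate(clicks, delivered),               # assumption: clicks per delivered email
        "conversion_rate": rate(conversions, clicks), # conversions per click
    }

# Hypothetical counts for a single variation
metrics = email_metrics(delivered=2000, opens=500, clicks=100, conversions=10)
print(metrics)
```

Running the same function on both variations gives you like-for-like numbers to compare.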

6. Declare a Winner

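Determine which version performed better on your goal metric, confirm that the difference is statistically significant rather than noise, and consider why it won.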
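One common way to check whether a difference is more than noise is a two-proportion z-test. The sketch below is an illustration under assumptions, not the method any particular ESP uses: the function name and the example counts are hypothetical, and it relies on the normal approximation, which works best with reasonably large groups.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled rate under the assumption that both variations perform the same
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all, so no evidence of a difference
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical example: 200 of 1,000 opened A, 250 of 1,000 opened B
z, p_value = two_proportion_z_test(200, 1000, 250, 1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A p-value below 0.05 is a widely used threshold for calling a winner, though the cutoff is a convention you can tighten for higher-stakes decisions.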

7. Scale and Refine

Send the winning email to the rest of your audience. Apply learnings to future campaigns.

Real-World A/B Testing Examples

| Test Type | Variation A | Variation B | Result |
|---|---|---|---|
| Subject Line | “Your 50% Off Ends Today” | “Final Hours: Claim Your Discount” | B increased open rate by 23% |
| CTA Button | “Shop Now” at bottom | “Shop Now” above fold | Above-the-fold CTA increased CTR by 35% |
| Send Time | Tuesday AM | Friday PM | Tuesday AM drove 18% more revenue |

Best Practices for A/B Testing

- Test regularly: Weekly or monthly tests keep your strategy evolving.

- Document results: Build a testing log to track wins and losses.

- Test meaningful differences: Make the variations distinct enough to generate useful insights.

- Segment further: Apply different tests to new subscribers, lapsed customers, and high-LTV segments.

- Optimize your winner: Winning emails can be further tested and refined over time.
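A testing log can be as simple as a CSV file with one row per test. The sketch below shows one possible shape; the `LogEntry` fields, the `write_log` helper, and the example date are hypothetical, and the subject lines are borrowed from the examples table above.

```python
import csv
import io
from dataclasses import dataclass, asdict, fields

@dataclass
class LogEntry:
    date: str
    element: str
    variation_a: str
    variation_b: str
    metric: str
    winner: str
    notes: str = ""

def write_log(entries, stream):
    """Write a header row plus one CSV row per test to any text stream."""
    writer = csv.DictWriter(stream, fieldnames=[f.name for f in fields(LogEntry)])
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))

entry = LogEntry(
    date="2024-01-15",  # hypothetical date
    element="Subject Line",
    variation_a="Your 50% Off Ends Today",
    variation_b="Final Hours: Claim Your Discount",
    metric="Open Rate",
    winner="B",
)
buffer = io.StringIO()  # swap for open("testing_log.csv", "w", newline="") to save a file
write_log([entry], buffer)
log_csv = buffer.getvalue()
print(log_csv)
```

Keeping losing and inconclusive tests in the same log helps you avoid re-running ideas that have already been tried.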

Common A/B Testing Mistakes (and How to Avoid Them)

| Mistake | Solution |
|---|---|
| Testing too many elements | Stick to one variable per test |
| Small sample size | Use a larger audience so real differences can reach statistical significance |
| Inconclusive results | Extend the test duration or increase your audience size |
| Not acting on insights | Document and apply your findings |

Tools for A/B Testing

| Tool | Features |
|---|---|
| Klaviyo | Email A/B testing, flow optimization, advanced analytics |
| Mailchimp | Easy-to-use split testing, subject line analysis |
| Litmus | Email previews, performance tracking, render testing |
| Optimizely | Advanced experimentation for multi-channel testing |

Visual & Table Suggestions for SEO + UX

| Visual/Table | Purpose |
|---|---|
| Testing Flow Diagram | Helps readers understand the A/B workflow from setup to analysis |
| Testing Log Template | Encourages documentation of results for continuous improvement |
| Email Design Comparison | Visual side-by-side of two tested emails |
| Metrics Dashboard Mockup | Shows how to read and interpret test data visually |

Adding visuals like these can increase dwell time, support SEO performance, and help readers retain more of your content.

Frequently Asked Questions

1. What’s the best element to test first?

Start with subject lines—they’re easy to test and can significantly impact open rates.

2. How big should my test audience be?

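Aim for at least 1,000 recipients per variant as a rule of thumb. The number you actually need depends on your baseline rate and on how small a difference you want to detect, so use a sample size calculator to estimate it before you send.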
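For readers who want to see what such a calculator does, here is a minimal sketch of a standard sample size approximation for comparing two rates. The function name, the default 5% significance level and 80% power, and the example rates are all assumptions chosen for illustration; dedicated calculators may use slightly different formulas.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate recipients needed per variant to detect the given change."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

# Hypothetical example: detect an open rate moving from 20% to 25%
print(sample_size_per_variant(0.20, 0.25))
# Smaller differences need far more recipients
print(sample_size_per_variant(0.20, 0.22))
```

With these example inputs the first result comes out at roughly 1,100 recipients per variant, close to the rule of thumb above, while the second call shows how quickly the requirement grows when the expected lift is small.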

3. How long should I run my A/B test?

Run tests for 24–72 hours depending on send volume. Avoid ending too early or during low-engagement periods.

4. Can I test more than two email versions?

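Yes. Testing three or more versions of a single element is often called A/B/n testing, and it needs a larger list because each version requires its own adequately sized group. Multivariate testing goes a step further by testing combinations of several elements at once; it is more complex and requires larger sample sizes still.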

5. Should I test with automated flows or just campaigns?

Both. Testing automated flows like welcome series or cart abandonments can yield huge long-term results.

6. What if my results are inconclusive?

Try testing a more distinct variable or increasing your audience size. Document everything—even failed tests provide insight.

Final Thoughts

A/B testing is one of the most powerful levers in your email marketing toolkit. It brings precision to your messaging, enables better decisions, and helps you understand your audience in ways that intuition alone never could.

Whether you’re optimizing a subject line or overhauling an entire campaign, the key is to stay curious, keep testing, and never stop improving.

At Blossom Ecom, we help brands design, test, and scale high-converting email strategies. Want to run smarter campaigns that drive results?

Let’s test, optimize, and grow—together.

